OpenAI has announced GPT-4, which is available through a paid version of ChatGPT on its official website. It is the next generation of the company's large language model, and it is also the foundation of Microsoft Bing's AI chatbot. The new model can read images as well as text. According to OpenAI, it is also more accurate than GPT-3.5, the model behind the previous version of ChatGPT: on the company's internal evaluations, it was 40% more likely to produce factual responses.

In general, language models like GPT (Generative Pre-trained Transformer) are important because they are designed to understand and generate human-like language, which has many practical applications. For example, they can be used for chatbots, language translation, content creation, and even support for medical diagnosis. As a rule, larger and more sophisticated language models tend to perform these tasks better.

GPT-3 was a significant breakthrough in natural language processing because of its size (175 billion parameters) and its ability to perform a wide range of language tasks with impressive accuracy. It could generate coherent text, answer questions, and even write code. As a result, many researchers and businesses have been exploring its potential applications and pushing the limits of what it can do.

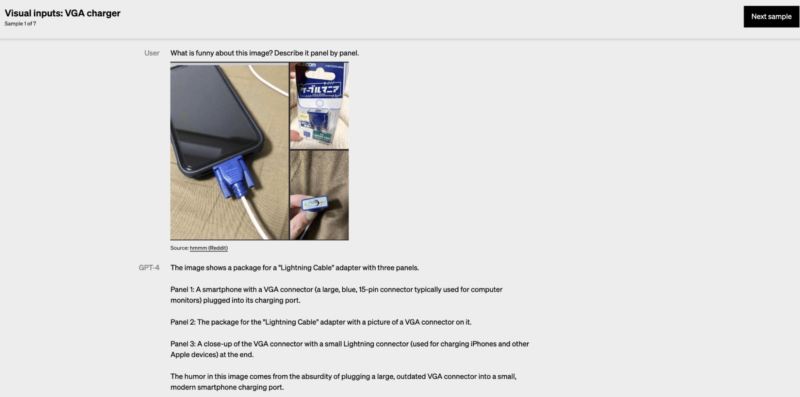

GPT-4 Can See with Visual Input:

GPT-3, the previous iteration of the GPT series, could not see images or perform image-related tasks, because it was designed to process and generate natural language. It could handle text tasks that touch on visual content, such as writing a caption from a written description of an image, but it was not trained on images and could not process visual data directly.

GPT-4, the model behind the updated ChatGPT, can now read images. It accepts an image as input, analyzes it, and generates a text response accordingly. Building image processing into a language model in this way leads to more advanced models that can understand and generate language based on visual inputs.
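To make the idea of "an image as input" concrete, here is a minimal sketch of how a text prompt and an image might be packaged into one chat message. It only builds the request data and does not contact any service. The field names follow the shape of OpenAI's Chat Completions image input format as generally documented, but treat them as an assumption to check against the current API reference; the helper names `build_image_message` and `build_request`, and the model name `example-vision-model`, are hypothetical placeholders.

```python
import base64
import json


def build_image_message(image_bytes: bytes, prompt: str,
                        mime_type: str = "image/png") -> dict:
    """Package a text prompt and one image into a single user message.

    The image is embedded as a base64 data URL so the message is
    self-contained. Field names are an assumption (see the lead-in).
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": data_url}},
        ],
    }


def build_request(image_bytes: bytes, prompt: str,
                  model: str = "example-vision-model") -> dict:
    """Wrap the message in a request body; the model name is a placeholder."""
    return {
        "model": model,
        "messages": [build_image_message(image_bytes, prompt)],
    }


if __name__ == "__main__":
    # Stand-in bytes (just the PNG file signature), not a real picture.
    fake_image = b"\x89PNG\r\n\x1a\n"
    request = build_request(fake_image, "Describe this image.")
    print(json.dumps(request, indent=2))
```

The point of the sketch is that the image travels alongside the text in the same message, so the model can answer the question with the picture in view.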

GPT-4 Features:

- GPT-4 likely has more parameters than its predecessor, GPT-3, which had 175 billion, although OpenAI has not published the figure.

- This would enable it to process and analyze more complex language structures and to perform a wider range of language tasks with greater accuracy and fluency.

- Furthermore, GPT-4 incorporates advancements in areas such as pre-training techniques, transfer learning, and fine-tuning, which could help it learn more efficiently and adapt to new tasks with greater ease.

- It also has improved capabilities in areas such as natural language understanding, question-answering, and dialogue generation.

- In addition, GPT-4 incorporates some level of multimodal understanding, such as the ability to generate text based on visual inputs.

- These vision capabilities could lead to more advanced language models that understand and generate language based on visual inputs.